Transfer Learning: Accelerating Computer Vision Applications with Pre-Trained Models

When building computer vision applications, leveraging pre-trained models can significantly speed up the development process and yield faster progress. Instead of training a neural network from scratch, you can download pre-trained weights and use transfer learning to adapt the model to your specific task. In this blog post, we will explore the concept of transfer learning and how it can be applied to computer vision tasks for efficient and effective results.

The Power of Pre-Trained Models

The computer vision research community has made significant contributions by posting numerous datasets online, such as ImageNet, MS COCO, and Pascal, which serve as valuable resources for training neural networks. These datasets have been used to train models with high-performance accuracy over several weeks or months, utilizing powerful GPUs and complex optimization techniques. By downloading these pre-trained models, you can benefit from the knowledge and expertise that went into their creation, saving you time and effort.

Transfer Learning for Custom Tasks

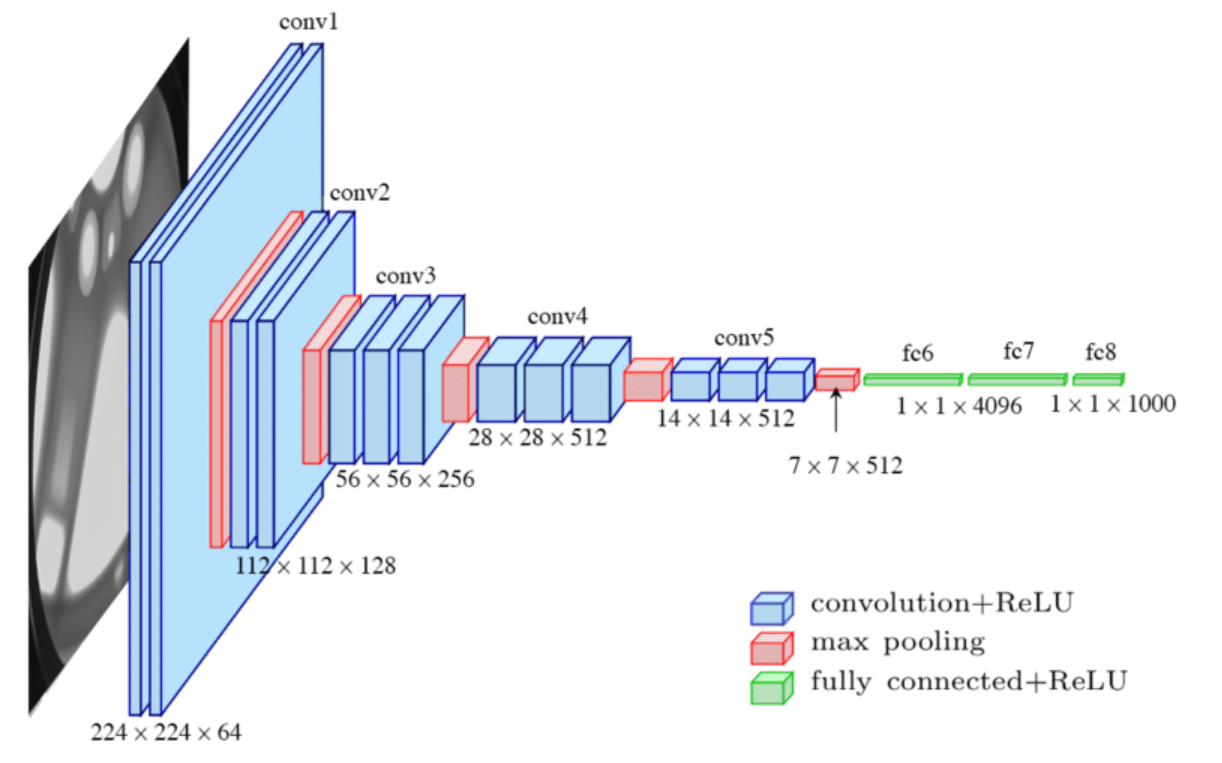

Let’s consider an example where you want to build a cat detector to recognize your own pet cat. Assuming you have a limited number of images of your cats, you might encounter a data scarcity issue. In such cases, transfer learning becomes invaluable. You can download a pre-trained neural network along with its weights, originally trained on a large dataset like ImageNet, which contains a thousand different classes.

To adapt the pre-trained model to your specific task, you need to modify the output layer. Instead of predicting one of the thousand classes, you create a new softmax layer with three possible outputs: Tigger, Misty, or neither. The key idea is to freeze the parameters of all the earlier layers in the network and only train the parameters associated with your softmax layer.

Freezing and Training Layers

Most deep learning frameworks support freezing layers, allowing you to specify which layers’ weights should remain unchanged during training. By freezing earlier layers, which have learned general features, you leverage their fixed functionality to compute feature vectors for input images. These feature vectors are then used as input for training a shallow softmax model that predicts the desired classes.

To speed up training, you can pre-compute the activations of these frozen layers for all examples in the training set and save them to disk. This way, you don’t need to recalculate the activations for every epoch or pass through the training set, resulting in more efficient computations.

Adapting to Different Dataset Sizes

The amount of labeled data you have for your task plays a crucial role in determining the extent of transfer learning. If you have a small training set, freezing more layers and training only a few parameters might suffice. However, with a larger labeled dataset, you can consider freezing fewer layers and training more parameters in the network.

For datasets with ample data, you can even replace the last few layers or add your own hidden units, followed by the softmax outputs. This allows you to fine-tune the network’s higher-level representations to better suit your specific task.

Embracing Transfer Learning in Computer Vision

Transfer learning has proven to be highly effective in computer vision applications. By utilizing pre-trained models, you can leverage the knowledge gained from extensive training on large datasets. This approach significantly improves performance, especially when you have limited labeled data or computational resources.

Unless you have an exceptionally large dataset and sufficient computational resources to train from scratch, transfer learning is an approach worth considering in computer vision. It allows you to benefit from existing expertise, save valuable time, and achieve competitive results with smaller training sets.

In conclusion, transfer learning offers a powerful strategy to accelerate the development of computer vision applications. By harnessing pre-trained models, you can efficiently adapt them to your specific tasks, overcoming data limitations and achieving impressive results. Embrace transfer learning and unlock the potential of computer vision in

your projects.