Data Augmentation

Deep Learning for Computer Vision: Navigating the Landscape

Deep learning has made significant advancements in various domains such as computer vision, natural language processing, speech recognition, online advertising, and logistics. However, when it comes to computer vision, there are unique challenges and considerations that researchers and practitioners must navigate. In this article, we explore some observations and insights shared in a speech about deep learning for computer vision, aiming to provide guidance in understanding the literature and building effective computer vision systems.

The Spectrum of Data Availability

Machine learning problems can be categorized based on the amount of available data. For instance, speech recognition has relatively abundant datasets compared to the complexity of the problem. On the other hand, image recognition or classification, which involves analyzing pixel-level information, still requires more data to achieve optimal performance. Object detection, a task that involves identifying and locating objects within images, often faces an even greater scarcity of data due to the cost of labeling objects and bounding boxes.

The Role of Data and Hand-Engineering

When it comes to training machine learning models for computer vision tasks, data and hand-engineering play crucial roles in achieving optimal performance. The sources of knowledge can be categorized into two main types:

1. Labeled Data

Labeled data serves as a direct source of information for supervised learning algorithms. It consists of pairs of input data (x) and corresponding labels (y), allowing the algorithm to learn patterns and make accurate predictions. The availability of labeled data is essential for training machine learning models effectively. In the context of computer vision, the speaker emphasizes that despite the existence of reasonably large datasets for image recognition or classification, the demand for more labeled data remains prevalent. Analyzing vast amounts of pixel information and identifying objects accurately necessitates substantial labeled data, which is often limited in the field of computer vision.

2. Hand-Engineering

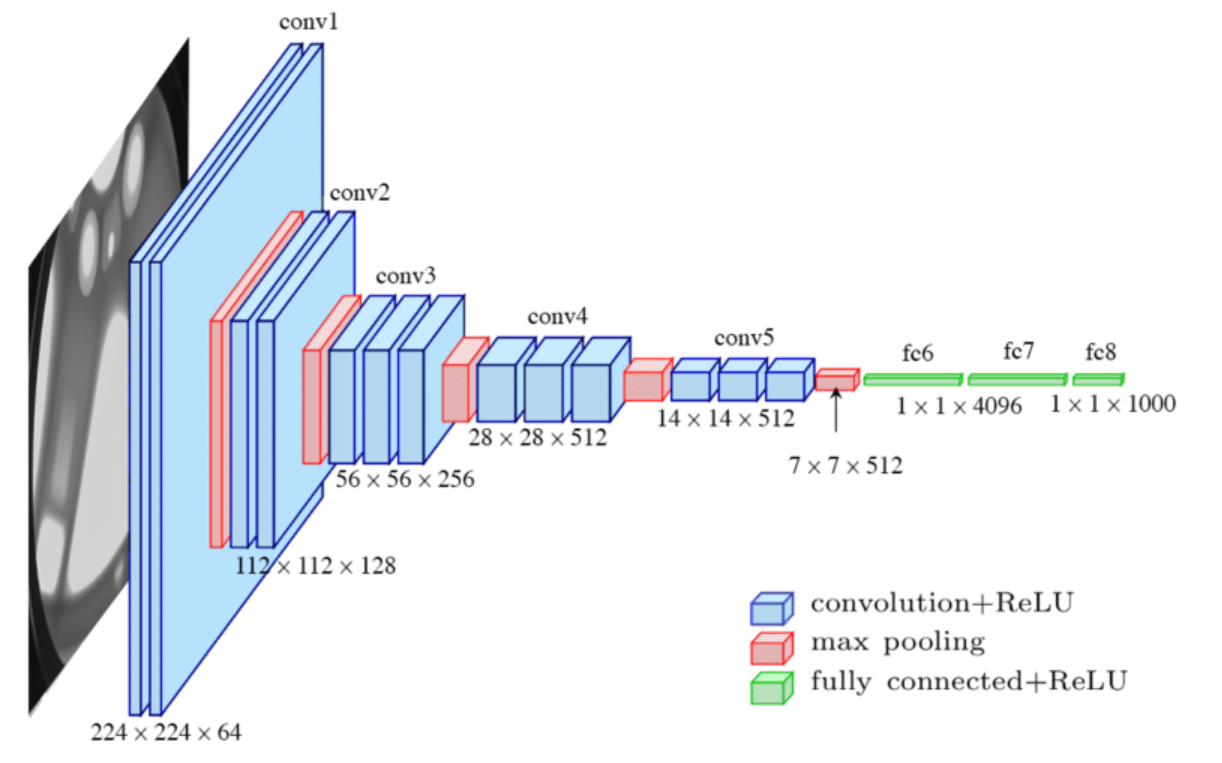

Hand-engineering refers to the process of designing features, network architectures, and other components of the system manually. In scenarios where abundant labeled data is not available, hand-engineering becomes crucial in compensating for the scarcity of data. It involves leveraging domain knowledge, intuition, and expertise to extract relevant features and design effective network architectures. Hand-engineering plays a significant role in achieving good performance when data is limited, as it allows the model to learn from the available data more efficiently.

Challenges in Computer Vision

Computer vision tasks entail learning complex functions, and even with the growing size of datasets, data scarcity remains a challenge. As a result, computer vision has historically relied heavily on hand-engineering. Complex network architectures have emerged to overcome the limitations of data availability. These intricate architectures often require meticulous hyperparameter tuning and exhibit more complexity compared to other domains. Object detection, with its smaller datasets, demands even more specialized components and algorithms.

Leveraging Transfer Learning

Transfer learning is a valuable technique in computer vision, especially when working with limited data. It involves utilizing pre-trained models on related tasks to boost performance on the target task. Transfer learning has shown significant improvements in areas such as object detection, where limited labeled data is available. By leveraging pre-existing knowledge captured in pre-trained models, the performance on the target task can be enhanced.

Balancing Benchmarks and Real-World Applications

The computer vision community places significant emphasis on achieving high performance on standardized benchmark datasets and winning competitions. While this focus helps identify effective algorithms, it sometimes leads to techniques that are not suitable for real-world applications. Here are some tips to navigate benchmarks effectively:

- Ensembling : Ensembling involves training multiple neural networks independently and averaging their outputs. It is a technique commonly used to improve benchmark performance. However, due to increased computational requirements, it is rarely employed in production systems.

- Multi-Crop at Test Time : Multi-crop at test time is a technique involving data augmentation during testing. It can enhance performance on benchmarks but may significantly impact runtime and is not commonly used in production systems.

These techniques should be carefully considered, keeping in mind the trade-offs between benchmark performance and real-world applicability.

Leveraging Existing Architectures

When building production systems, leveraging pre-existing neural network architectures can be advantageous. Open-source implementations provide ready-to-use architectures that can be fine-tuned on specific datasets, saving time and effort. These pre-trained models have often undergone extensive training on large datasets, allowing for a faster start in real-world applications. However, training networks from scratch is still an option for those with sufficient computational resources and expertise.

In conclusion, deep learning has revolutionized computer vision, but challenges persist due to limited data availability. Hand-engineering and specialized network architectures have been instrumental in compensating for data scarcity. Techniques like transfer learning and leveraging pre-trained models can significantly improve performance in small data regimes. While achieving high benchmarks is important, it is crucial to differentiate between techniques suitable for competitions and those applicable to real-world production systems.

Understanding the nuances and techniques specific to computer vision enables researchers and practitioners to navigate the field effectively and build robust computer vision systems.